LipSynth: Hackathon Submission for Bitcamp 2022

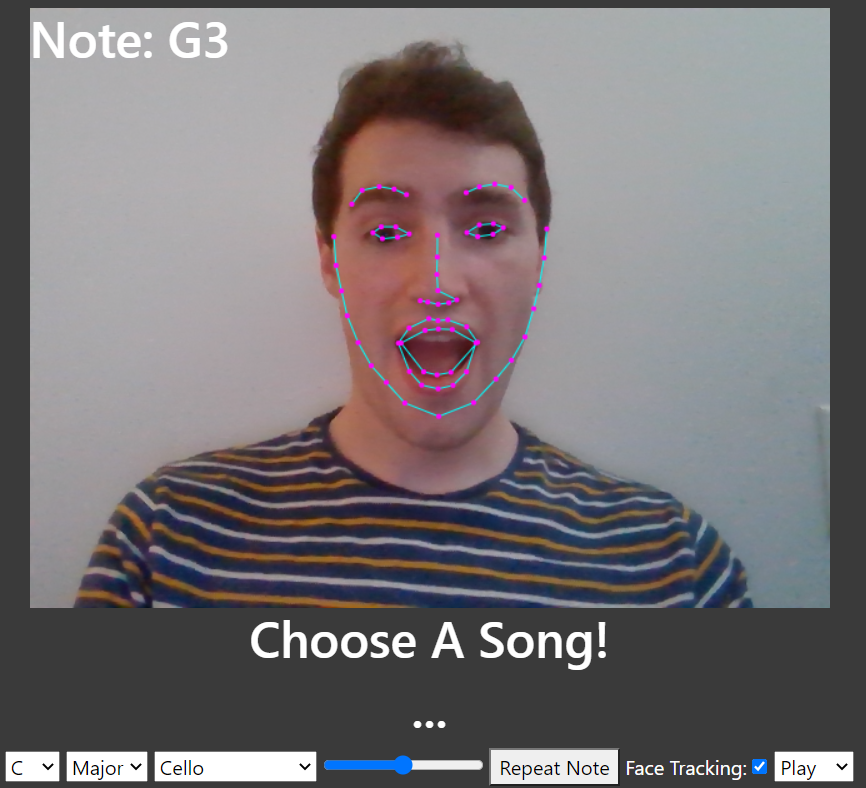

Introducing LipSynth: a silly browser-based karaoke/rhythm game that you play with your face! Built for Bitcamp 2022.

Bitcamp was this past weekend! Bitcamp is UMD’s largest annual hackathon and usually draws over 1,000 participants. This year was the 8th anniversary, aka “Bytecamp”. I was on a team with my friends Ryan and Andoni, and we decided to throw our hat in the ring with a project using facial tracking, synthesizers, and the Spotify API. It ended up winning an MLH award!

Click here to try it out now (requires a webcam) or check out our official Devpost submission.

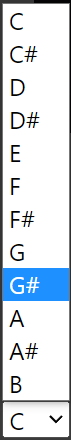

LipSynth uses a convolutional neural network to detect 68 keypoints across your face. It then maps your expression to a musical note and plays it using your selected instrument and key in real time! Wider mouth = higher pitch.
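
To make the "wider mouth = higher pitch" idea concrete, here is a minimal sketch of one way the mapping could work. It is not our actual code: the landmark indices (0 and 16 for the jaw, 48 and 54 for the mouth corners) assume the common 68-point layout, and the `minRatio`/`maxRatio` calibration bounds and all function names are made up for illustration.

```typescript
// A 2D landmark position in image pixels.
type Point = { x: number; y: number };

// Assumed indices in the common 68-point layout (a guess for this sketch):
// 0 and 16 are the outer jaw points, 48 and 54 are the mouth corners.
const JAW_LEFT = 0;
const JAW_RIGHT = 16;
const MOUTH_LEFT = 48;
const MOUTH_RIGHT = 54;

function distance(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// Mouth width divided by face width, so the value stays roughly the same
// when the player moves closer to or farther from the camera.
function mouthWidthRatio(landmarks: Point[]): number {
  const faceWidth = distance(landmarks[JAW_LEFT], landmarks[JAW_RIGHT]);
  if (faceWidth === 0) return 0;
  return distance(landmarks[MOUTH_LEFT], landmarks[MOUTH_RIGHT]) / faceWidth;
}

// Map a ratio onto a list of note names: wider mouth = higher note.
// minRatio and maxRatio are hypothetical calibration bounds.
function noteForRatio(
  ratio: number,
  scale: string[],
  minRatio = 0.3,
  maxRatio = 0.5
): string {
  // Clamp to [0, 1] so extreme expressions still land on a valid note.
  const t = Math.min(1, Math.max(0, (ratio - minRatio) / (maxRatio - minRatio)));
  const index = Math.min(scale.length - 1, Math.floor(t * scale.length));
  return scale[index];
}
```

The returned note name (for example `"G4"`) is the kind of string a Tone.js synth accepts in `triggerAttackRelease`, so the output of `noteForRatio` could be played directly each time the detected note changes.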

You can play karaoke by first logging in with your Spotify account and then entering a song name. A visual indicator will show how to change your face to play the correct note, along with another indicator for the next note in the song. It can be quite challenging!

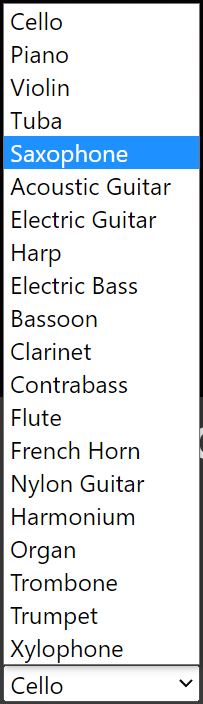

LipSynth will automatically adjust the key signature to match the song, but feel free to choose whatever instrument suits your fancy 🙂
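
As a rough illustration of the key matching, the sketch below turns a key and mode into a playable scale. It assumes the song's key arrives the way Spotify's audio features report it, as a pitch class integer (0 = C, 1 = C♯, and so on, with -1 for "unknown") plus a mode flag (1 = major, 0 = minor). The function name and the fallback to C are hypothetical choices for this example, not necessarily what LipSynth does.

```typescript
const PITCH_CLASSES: string[] = [
  "C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B",
];

// Semitone offsets from the root for a major and a natural minor scale.
const MAJOR_STEPS: number[] = [0, 2, 4, 5, 7, 9, 11];
const MINOR_STEPS: number[] = [0, 2, 3, 5, 7, 8, 10];

// Build one octave of note names for a key/mode pair.
// key: pitch class 0..11 (anything else falls back to C, an assumption).
// mode: 1 for major, anything else is treated as minor.
function scaleForKey(key: number, mode: number, octave = 4): string[] {
  const root = Number.isInteger(key) && key >= 0 && key <= 11 ? key : 0;
  const steps = mode === 1 ? MAJOR_STEPS : MINOR_STEPS;
  return steps.map((step) => {
    const semitone = root + step;
    // Octave numbers roll over at C, so notes past B move up one octave.
    return PITCH_CLASSES[semitone % 12] + String(octave + Math.floor(semitone / 12));
  });
}
```

For example, `scaleForKey(9, 0)` gives an A minor scale starting at `A4`, which could then be used as the list of notes the player's face selects from.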

We built it over the course of the 36-hour hackathon using Node.js, face-api.js, Tone.js, and the Spotify API. Unfortunately, the Spotify API does not have completely accurate note data for every song. For most tracks, it estimates the notes by analyzing the waveform directly, which generally does a decent job. The whole thing is hosted on a free Heroku container.
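
For the curious, here is a hedged sketch of how note data can be pulled out of that kind of waveform analysis. It assumes input shaped like the segments in Spotify's audio analysis, where each segment has a start time, a confidence, and a 12-value `pitches` vector giving the relative strength of each pitch class. The `Segment` interface, the function name, and the 0.5 confidence cutoff are illustrative assumptions, not our exact implementation.

```typescript
// Only the fields this sketch needs; assumed to mirror an audio-analysis segment.
interface Segment {
  start: number;      // seconds from the start of the track
  confidence: number; // 0..1, how reliable the segment is
  pitches: number[];  // 12 strengths, one per pitch class starting at C
}

interface TimedNote {
  time: number;
  pitchClass: string;
}

const CHROMA_NAMES: string[] = [
  "C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B",
];

// Pick the strongest pitch class in each sufficiently confident segment.
// minConfidence is a made-up threshold; it would need tuning per song.
function melodyFromSegments(segments: Segment[], minConfidence = 0.5): TimedNote[] {
  const notes: TimedNote[] = [];
  for (const segment of segments) {
    if (segment.confidence < minConfidence || segment.pitches.length !== 12) continue;
    let best = 0;
    for (let i = 1; i < 12; i++) {
      if (segment.pitches[i] > segment.pitches[best]) best = i;
    }
    notes.push({ time: segment.start, pitchClass: CHROMA_NAMES[best] });
  }
  return notes;
}
```

Taking only the loudest pitch class per segment is a crude approximation: it picks up whatever dominates the mix, which is not always the vocal melody. That is one plausible reason the note data can feel off for some songs.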

Huge credit to Ryan and Andoni for pulling some late nights to get this finished. Check them out too:

- Ryan Druffel: github.com/ryan-druffel, thefriendlynextdoorneighbor.gitlab.io

- Andoni Sanguesa: github.com/AndoniSanguesa